Choir VST plugin

Plogue’s Alter/Ego is a new voice synthesis platform. It’s free and can run as standalone software or as a VST, AU, or RTAS/AAX plugin, in 32-bit or 64-bit versions. It currently has one voice available – Daisy, who is also free.

Voice synthesis is in a way the ultimate challenge when it comes to fooling the human ear – we humans are very good at noticing when something in another person’s voice is off, for example when they’re not as honest or confident as they’d like us to think. This carries over to noticing anything wrong or unnatural in synthesized voices as well.

In the interest of full disclosure, I beta-tested the Alter/Ego vocal synthesizer before its release, and I’ve also released some sample libraries for Plogue’s Sforzando sampler.

Technology

Alter/Ego is based on Plogue’s Chipspeech platform, which recreates early attempts at synthesizing voices using vintage hardware up to 1984. This is quite a different starting point from other voice synthesizers, such as Vocaloid or UTAU, or vocal sample libraries with wordbuilders like Realivox Blue or the Virharmonic choirs. More than two dozen parameters control how the voice recordings are transformed into audio output, all linked to MIDI CC. Some are pretty obvious, like helium. Others, like fem factor, wave speed or impulses, take some trial and error before you get a feel for what they really do to the sound. Vibrato alone gets nine parameters. English and Japanese are the supported languages.

Daisy is based on recordings of the voice of one person (Crusher-P, who also produces music using ), but is capable of both female and male voices as well as polyphonic choirs, whispering and downright out-there weirdness. As a way of explaining how one voice can produce all those different sounds, Plogue have cleverly made her a time-traveling robot. More than a voice, Daisy is also a character – from what I see on her she currently seems to be romantically involved with Dandy 704, the Chipspeech voice based on the technology IBM used to synthesize the song “Daisy Bell” all the way back in 1961.

Sound

Daisy seems at her best with higher female vocals – she sounds most convincing and powerful there. Male vocals sound more synthetic and more subdued. Cartoonish voices with the helium turned up also sound good, in an obviously unnatural way.

Daisy is a robot, but she can sound quite human, especially when singing longer syllables. It’s quite an eerie feeling when, after a few robotic words, a longer note shows up, vibrato kicks in, and Daisy sounds very human for a second or two before suddenly turning robotic again. Whispery sounds also come across as more human. Automating the vibrato (or controlling it via mod wheel) and expression can make a performance quite expressive and emotional. Other parameters can also add emotion in more subtle ways, for example changing the impulse width to make the sound slightly less smooth and more gritty.

One sound I haven’t been able to get is a very aggressive or dirty vocal – Daisy’s always too clean and nice to ever sound much like Tom Waits or even Katy Perry. Extreme metal vocals are not really possible, either. Lower notes can sound a bit growly, though, so maybe distortion or other external effects can bring that out.

Mixing these vocals isn’t very difficult – you can use the same effects you would normally put on a human voice, including harmonic exciters and sweeping resonant filters. Just skip noise reduction and pitch correction. Multiple instances used for backing vocals, though, need to be treated differently from one another to help separate them: they’re all based on the same set of recordings, so they’ll be much more in sync with each other than multiple human vocalists ever would. If you want, though, you can have one polyphonic robot singer producing both male and female voices at the same time.

But you’d rather hear Daisy instead of just reading about how she sounds, right? So, here is a demo produced by PianoBench.

At this point nothing is known about future Alter/Ego voices, but Daisy has been described as a bridge between the vintage technology of Chipspeech and the future.

Workflow

Singing something is quite easy – simply load the plugin into your DAW, load a voice preset, type some lyrics (or even load some preset lyrics), and give it some MIDI notes. Inputting an entire song doesn’t take very long, either. My first attempt at punching some sheet music into the piano roll and typing in lyrics sounded recognizable but very robotic, and the timing of some of the syllables wasn’t quite right.

So, you have to learn some tricks, which users of other vocal synthesizers will probably also recognize: moving notes for syllables which start with certain consonants slightly earlier, so the first vowel of the syllable sounds on the beat; leaving short rests between notes to give the previous syllable some time to decay; and replacing lyrics with phonetic information to get the transitions between words to sound natural. This seems to be true of both English and Japanese – I got a Japanese speaker to test this for about an hour, and she mentioned that to get things pronounced correctly she’d replace some of the written syllables with different ones.

You can get really deep into this, and it’s a skill set of its own, but at least maximizing intelligibility doesn’t seem to involve constant manipulation of parameters like syllable attack time or phoneme speed to get them just right for every individual syllable. Playing notes with a MIDI keyboard seems to work slightly better than punching them into the piano roll, but the notes still need tweaking afterwards.
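For anyone who prefers to script these tweaks rather than drag notes around by hand, here is a rough sketch of the two timing tricks mentioned above: starting consonant-led syllables a little early and leaving a short rest before the next note. It is plain Python working on a list of notes measured in MIDI ticks, and it has nothing to do with how Alter/Ego works internally. The `Note` class, the `adjust_timing` function, the 24-tick values and the "first letter is not a vowel" test are all assumptions made up for illustration; in practice you would tune the amounts by ear and only shift the consonants that actually need it.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Note:
    start: int      # onset in MIDI ticks
    duration: int   # length in MIDI ticks
    pitch: int      # MIDI note number
    syllable: str   # lyric syllable sung on this note

VOWELS = set("aeiou")

# Hypothetical amounts (at 480 ticks per quarter note), not taken from Alter/Ego.
CONSONANT_LEAD = 24   # how much earlier a consonant-led syllable starts
RELEASE_GAP = 24      # rest left before the next note so the syllable can decay

def starts_with_consonant(syllable: str) -> bool:
    # Crude spelling-based guess; real phonetics are messier than this.
    return bool(syllable) and syllable[0].lower() not in VOWELS

def adjust_timing(notes, lead=CONSONANT_LEAD, gap=RELEASE_GAP):
    """Return new notes with early consonant onsets and short rests between notes."""
    ordered = sorted(notes, key=lambda n: n.start)

    # Pass 1: pull consonant-led syllables earlier so the vowel lands on the beat.
    new_starts = [
        max(0, n.start - lead) if starts_with_consonant(n.syllable) else n.start
        for n in ordered
    ]

    # Pass 2: trim each note so it ends at least `gap` ticks before the next onset.
    adjusted = []
    for i, note in enumerate(ordered):
        end = note.start + note.duration
        if i + 1 < len(ordered):
            end = min(end, new_starts[i + 1] - gap)
        duration = max(1, end - new_starts[i])  # never collapse a note entirely
        adjusted.append(replace(note, start=new_starts[i], duration=duration))
    return adjusted

if __name__ == "__main__":
    melody = [
        Note(0, 480, 67, "dai"),
        Note(480, 480, 64, "sy"),
        Note(960, 480, 60, "an"),
    ]
    for n in adjust_timing(melody):
        print(n)
```

The notes are processed in two passes so that the rest before each note is measured against the already-shifted onset of the next one, otherwise an early consonant would eat the gap that was just created.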

So, trying to sound as human as possible takes a significant amount of work and skill. Even if you’re trying to sound robotic, tweaks of timing will make things sound considerably more musical and intelligible. Another challenge is keeping the lyrics in sync with the music when skipping to a different part of the track. You have to make sure that the lyrics are going to be in the correct place, as they are not permanently linked to the notes. This is probably the most awkward aspect of the plugin. Sure, you get used to keeping things in sync, but not having to do it would be nice. You can’t expect a vocal synthesizer to be as convenient to use as a virtual analog synth or drum machine, though.

YOU MIGHT ALSO LIKE

Share this Post

Related posts

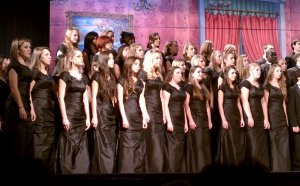

Show Choir dresses for sales

EVAN CAIN, Account Manager Carmel High School, Ambassadors I think my favorite memory from show choir was the last time our…

Read MoreChurch Choir Devotions

On Saturday, September 13, 2014, new and returning members of the National Lutheran Choir gathered for their first rehearsal…

Read More